…Two weavers who promise an Emperor a new suit of clothes invisible to those unfit for their positions or incompetent. When the Emperor parades before his subjects in his new clothes, [only] a child cries out, "But he isn’t wearing anything at all!" [Everyone else was too scared to say anything.] [3]

Something that I have noticed about the software development industry is that people will go to extraordinary lengths to author the most well-structured software system. This includes the use of wonderful design patterns, both the Gang of Four originals and more contemporary additions; the surprising new trend of developers writing their own test cases in NUnit or MSTest and adding them to their projects; the use of object-relational mapping (ORM) technologies; and, more recently, the use of something supposedly new called inversion of control [+ dependency injection] (IoC DI)[1].

All of this not only improves the working experience for the developer, it also produces software that is better structured, better designed, loosely coupled and, obviously, testable. Developers have been wonderful in adopting a ‘let’s stop for a minute and do this better’ mentality, and history has shown that these new tactics have been quite successful, leading to well-rounded, reliable software.

However, getting many of the above-mentioned technologies, patterns and practices to work generally requires developers to resort to something quite disturbing: something that requires complicated procedures; something that is error prone; something that can be a security risk; and in some cases something that can lead to application instability.

I speak, of course, of the practice of developers hand-editing XML files in order to configure a 3rd party framework or library. XML when used right can be rather wonderful; I fully support it for scenarios such as data interoperability, transformations, SOAP messaging and so on. I have no problem with a configuration tool or system saving application settings to XML, just so long as the user experience is not hand-edited-XML-first. Contrary to widespread belief, XML is not human readable; I doubt your English-speaking grandmother would be able to decipher it. Nor is XML self-correcting – just try deleting a ‘>’ and see what happens. This is why it should never be edited by hand, regardless of skill; I doubt anyone can claim to hand-edit it 100% without errors.
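To make the "delete a ‘>’" point concrete, here is a minimal sketch in Python using the standard library's XML parser. The configuration fragment and the `try_parse` helper are invented for illustration and are not taken from any real product's config format.

```python
import xml.etree.ElementTree as ET

# Hypothetical configuration fragment, invented for illustration only.
GOOD = '<settings><add key="timeout" value="30" /></settings>'

# The same fragment with a single '>' deleted from the opening tag.
BROKEN = GOOD.replace("<settings>", "<settings", 1)


def try_parse(text):
    """Return (True, root tag) on success or (False, parser message) on failure."""
    try:
        return True, ET.fromstring(text).tag
    except ET.ParseError as err:
        # The parser does not try to repair the document; it simply gives up.
        return False, str(err)


print(try_parse(GOOD))
print(try_parse(BROKEN))
```

One missing character is enough for the whole document to be rejected, and the parser's message is usually all the help the person editing it gets.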

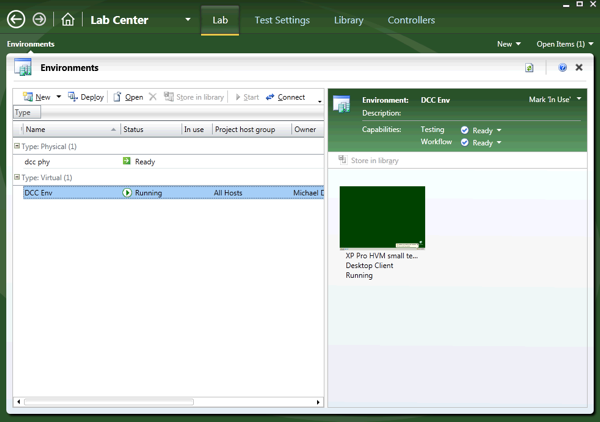

Libraries such as NHibernate require configuration before they can be used – fair enough, but forcing developers to manually tweak XML configuration files is not only time consuming, it is also error prone. I believe there are now tools to generate the XML files.

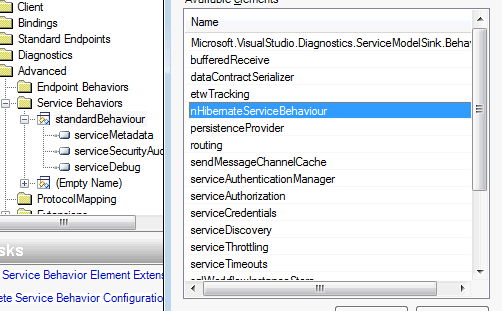

IoC systems such as StructureMap (SM) also require configuration. Most uses I have seen are via hand-edited XML (though I believe runtime calls are also available). The issue here is that configuration for SM is subject to problems of its own, security among them [2].

Then there are the technologies that take XML to heart quite literally, their authors being such fanboys of XML that they include it in the name of their system. The bizarre installer technology Wix and Microsoft’s XAML are two examples. Wix offers developers questionable value whilst at the same time opening up a whole new set of problems for installer developers.

Shame on you, Visual Studio users: have you not seen the Setup project wizards? Click a few buttons and away you go. You can even do custom build actions in type-safe .NET should the need arise!

Don’t get me started on XAML. Whoever thought programming in a data-structure file format was a good idea has issues. Hand-edited XSLT is confusing enough. I get the impression that Microsoft wanted to release an alternative to Windows Forms development, but instead of providing a proper editor they just deployed XAML and said “…there you go! Have fun!”. We certainly got fooled into thinking hand-editing XAML is pretty neat.

Personally I feel Wix and XAML share many traits: they both represent a backward step for the developer experience, they both embody a crazy programming-in-a-data-structure mentality, and they both highlight the lack of a good IDE tool.

On the subject of Microsoft, their original sin was to allow developers to believe, or at least to give them the impression, that creating, deploying and hand-editing xxx.exe.config files is the norm. This practice remains to this day, surprisingly, even after the Vista Development Guidelines stipulated that an application should not write to any files in .\Program Files after it has been installed (ignoring patches). So you shouldn’t be updating xxx.exe.config files there. Yeah, I know there is the file virtualisation thing, but then again I can call WriteProfileString to write to the Win.ini file. Just because you can do something does not mean that you should.

Microsoft followed this up by allowing .NET Remoting and later WCF configuration to be persisted, by default, to the application’s xxx.exe.config file. Again it’s bad form (see my prior post) because a user can easily change settings that have huge ramifications for your application. Case in point – WCF allows you to specify a transport provider as well as transport attributes. A user can change your finely-tuned, well-tested and approved TCP/IP binary, no encryption, SOAP message settings to, say, https, and now suddenly your application’s message size has grown considerably. Not only that, because the user selected WS-R, whether consciously or through accident, it’s no longer a one-way message but perhaps up to four or so!

The innocent editing of a plain-view configuration file can have major ramifications for not just application behaviour but also application performance! A GUI configuration tool provides a rich user experience – it warns the user; it usually has some form of online help. An XML config file does not!

Some of the technologies I mentioned have tried to clean up their act. Unfortunately the damage has been done, regardless of the good intentions behind follow-up tools that reduce XML hand-editing: people continue to hand-edit XML even when they know about richer API counterparts or additional helper tools.

So I think, or perhaps hope, that developers are aware that providing complex configuration via hand-edited XML, or programming in XML-related data structures, is not really ideal. It’s a bit like the Hans Christian Andersen story – The Emperor’s New Clothes.

Most of us know it’s not really a good idea but we don’t do anything about it.

Until next time…

—————————————————-

[1] IoC DI is nothing new; anyone familiar with developing COM Shell Namespace Extension plug-ins (which has pretty much not changed since Windows 95) will see similarities. Microsoft Management Console snap-ins are another example. I’m quite sure avid readers will find other examples that predate my computer lifetime. IoC, incidentally, merely means that the API calls into your code; a button click event is a good example.
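As a rough illustration of the button-click idea in [1], here is a minimal Python sketch. The `Button` class is a hypothetical stand-in for a UI framework, not any real toolkit's API; the point is only that the framework, not your code, decides when your handler runs.

```python
class Button:
    """Hypothetical stand-in for a framework-owned button."""

    def __init__(self):
        self._handlers = []

    def on_click(self, handler):
        # Your code hands the framework a function to call later.
        self._handlers.append(handler)

    def click(self):
        # The framework decides when to call back into your code.
        for handler in self._handlers:
            handler()


clicks = []


def save_document():
    # Application code: it never calls itself, it waits to be called.
    clicks.append("saved")


button = Button()
button.on_click(save_document)
button.click()  # simulates the user pressing the button
print(clicks)
```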

[2] Because types and methods must generally be exposed publicly for SM IoC DI to work, and because most configuration is via an XML config file, a reasonably skilled malicious user can alter your config to call a different type or member, thus changing the behaviour of your system in an unforeseen way. For example, you might have accidentally exposed a type or method publicly, allowing the malicious user to use that type instead. This type may offer similar functionality but could lead to a drastic outcome. Perhaps this type was for testing purposes only.
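The risk in [2] can be sketched in a few lines of Python. This is not StructureMap and not its config format; the `resolve` function, the `PUBLIC_TYPES` registry, the two payment classes and the `<service type="…"/>` element are all hypothetical, invented only to show how a type chosen by name in an editable file can be swapped.

```python
import xml.etree.ElementTree as ET


class LivePayment:
    def charge(self, amount):
        return f"charged {amount}"


class TestPayment:
    """Meant for testing only, but still publicly reachable."""

    def charge(self, amount):
        return "skipped charge"


# Hypothetical registry of every publicly exposed type the container can build.
PUBLIC_TYPES = {"LivePayment": LivePayment, "TestPayment": TestPayment}


def resolve(config_xml):
    """Build whichever type the config file names; no further checks are made."""
    type_name = ET.fromstring(config_xml).get("type")
    return PUBLIC_TYPES[type_name]()


ORIGINAL = '<service type="LivePayment" />'
# One attribute edited by hand in the config file.
TAMPERED = '<service type="TestPayment" />'

print(resolve(ORIGINAL).charge(10))
print(resolve(TAMPERED).charge(10))
```

Nothing in the application code changed between the two calls; only the text of the config did.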

[3] Wikipedia – http://en.wikipedia.org/wiki/The_Emperor%27s_New_Clothes
