“simplicity is the ultimate sophistication”

– Leonardo da Vinci

Stack Overflow is a popular website where Unity developers post questions about all things Unity. At the time of writing there are approximately 113,000 posts, of which about 2,640 are concerned with my biggest hate in the Unity API: coroutines, specifically WaitForSeconds().

The Unity coroutine API’s StartCoroutine() and WaitForSeconds() are, when used together, a popular approach (usually among novices) for executing steps at specific times whilst a game is running.

First an example. Here the code is essentially implementing an orchestration to transform an object over a period of time, a rudimentary animation if you will:

    void Start()
    {
        StartCoroutine(DoStuff());
    }

    IEnumerator DoStuff()
    {
        // Rotate 45 degrees
        transform.Rotate(new Vector3(45, 0, 0), Space.World);

        // Wait for 3 seconds
        yield return new WaitForSeconds(3);

        // Rotate 20 degrees
        transform.Rotate(new Vector3(20, 0, 0), Space.World);

        // Wait for 2 seconds
        yield return new WaitForSeconds(2);

        // Move forward 50 metres
        transform.Translate(Vector3.forward * 50);
    }

There are various problems with this approach:

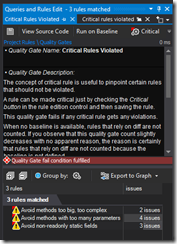

- Novices are given the false impression that Unity coroutines are magically executed behind the scenes and that anything they do won’t impact the frame rate of the game. This is far from the truth, as coroutines are multiplexed and executed over a series of frames, piggy-backed on Unity’s main thread! So the frame rate is at the mercy of the most expensive step in any coroutine, be it the longest for-loop, the slowest I/O operation or a texture load.

- If used incorrectly (which is very easy to do), it is akin to .NET’s Application.DoEvents(), whereby your application can suffer from re-entrancy.[1] Quite often I see developers reporting problems with their game exhibiting slow frame rates and using excessive memory. The cause is usually unguarded coroutine spawning, i.e. not checking whether the coroutine is already running. When this happens inside, say, Update(), it is a recipe for disaster.

- It is a misuse of .NET’s IEnumerable and yield return pattern.

On this last point, Cory Nelson on Stack Overflow says it best:

“Developers do not expect iterating an IEnumerable to change a program’s state. It’s not what IEnumerable is intended for and is an inconsistent behavior with all of .NET.

What Unity is doing is using yield return as a pseudo-coroutine, and it’s going to cause confusion for any devs unfamiliar with that.”

– Cory Nelson, former developer at Microsoft, “Why shouldn’t I ever use coroutines?”, https://stackoverflow.com/a/35817077/585968
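To make the first and last of these points concrete, here is a minimal sketch in plain C# with no Unity dependency. IteratorDemo and its members are hypothetical names used only for illustration. It shows that an iterator method runs nothing when it is created, and that each call to MoveNext() then executes the body up to the next yield return on the caller’s own thread, mutating state as it goes. In a game, Unity’s scheduler plays the role of that caller.

```csharp
using System;
using System.Collections;
using System.Threading;

public static class IteratorDemo
{
    // Counts how many steps of the iterator body have executed.
    public static int StepsRun;

    // Records which thread executed the most recent step.
    public static int LastThreadId;

    // An iterator written in the style of a Unity coroutine.
    public static IEnumerator DoStuff()
    {
        Step();             // executes during the first MoveNext()
        yield return null;
        Step();             // executes during the second MoveNext()
        yield return null;
        Step();             // executes during the third MoveNext()
    }

    private static void Step()
    {
        StepsRun++;
        LastThreadId = Thread.CurrentThread.ManagedThreadId;
    }
}
```

Calling IteratorDemo.DoStuff() leaves StepsRun untouched; only the subsequent MoveNext() calls advance it, and they do so on whichever thread makes the call. A slow Step() would therefore stall that thread for as long as it takes.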

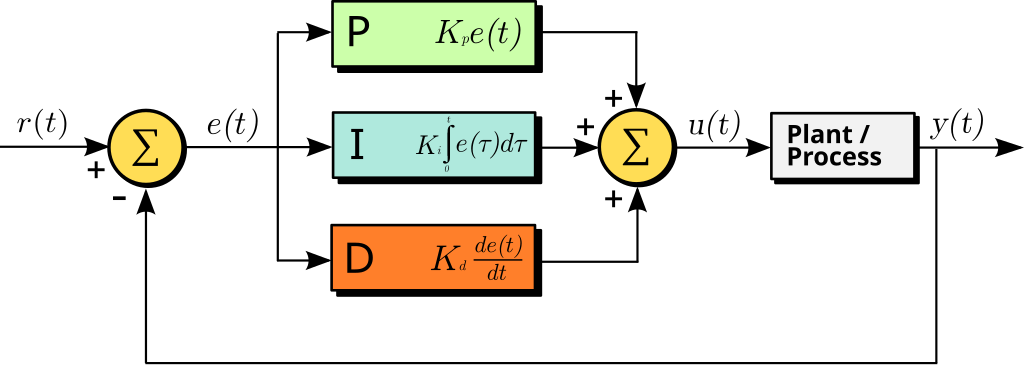

Alternative

In most cases an orchestration simply needs to wait for a time interval to pass before proceeding. The simplest approach, one that can be applied to whatever game engine you are using, is to take note of the time at the beginning and then measure the time elapsed since then.

e.g.

    private static float MyDelay = 1f; // One second

    DateTime _start;

    void Start()
    {
        _start = DateTime.Now;
    }

    void Update()
    {
        var elapsed = DateTime.Now - _start;

        if (elapsed.TotalSeconds > MyDelay)
        {
            // do something

            _start = DateTime.Now; // Prepare for next interval
        }
    }

If you are concerned with DateTime being too heavy, you can use Environment.TickCount.
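If you do go down the Environment.TickCount route, bear in mind that it is a signed 32-bit millisecond counter that wraps from int.MaxValue to int.MinValue after roughly 24.9 days of uptime. Below is a minimal sketch of a wrap-safe elapsed check; TickTimer and HasElapsed are hypothetical names, not part of .NET or Unity, and the check assumes the interval being measured is itself shorter than about 24.9 days.

```csharp
using System;

public static class TickTimer
{
    // Returns true when at least delayMs milliseconds separate startTicks
    // from nowTicks. Both values are expected to come from Environment.TickCount.
    public static bool HasElapsed(int startTicks, int nowTicks, int delayMs)
    {
        // The unchecked subtraction still yields the true difference when the
        // counter wrapped from int.MaxValue to int.MinValue in between.
        int elapsed = unchecked(nowTicks - startTicks);
        return elapsed >= delayMs;
    }
}
```

In Update() this would be called as TickTimer.HasElapsed(_startTicks, Environment.TickCount, 1000), with _startTicks captured from Environment.TickCount in Start().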

Here’s a helper class I like to use:

    public class Delay
    {
        private float _lastInterval;

        /// <summary>
        /// Creates a delay that starts counting immediately
        /// </summary>
        /// <param name="timeout">The timeout in seconds</param>
        private Delay(float timeout)
        {
            Timeout = timeout;
            _lastInterval = Time.realtimeSinceStartup;
        }

        public float Timeout { get; }

        public bool IsTimedOut => Time.realtimeSinceStartup > _lastInterval + Timeout;

        public void Reset()
        {
            _lastInterval = Time.realtimeSinceStartup;
        }

        public static Delay StartNew(float delayInSeconds)
        {
            return new Delay(delayInSeconds);
        }
    }

…and use it like so:

public class JetFighterHud: MonoBehaviour

{

private static readonly int DefaultUpdateFramesPerSecond = 15;

[SerializeField]

[Range(1,60), Tooltip("How often the display is updated in frames/second")]

private int refreshRate = DefaultUpdateFramesPerSecond;

private Delay _delay;

private void Start()

{

_delay = Delay.StartNew(1f / refreshRate);

}

private void Update()

{

if (!_delay.IsTimedOut)

{

return;

}

// .

// do all costly drawing here

// .

// Get ready for next period

_delay.Reset();

}

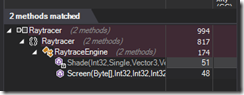

}I also use it in my workflows for my flight simulator. Here’s an extract for a TakeoffWorkflow:

    protected override void OnUpdate()
    {
        if (!_stateMachine.IsTimedOut)
        {
            return;
        }

        //Tracer.Info($"{State}");

        switch (State)
        {
            case States.Idle:
                if (_stepDelay.IsTimedOut)
                {
                    State = States.PreStartup;
                }
                break;

            case States.PreStartup:
                if (!_aircraftLightsManager.enabled)
                {
                    _aircraftLightsManager.enabled = true;
                    Tracer.LogInformation("NAV lights enabled");
                }

                if (_stepDelay.IsTimedOut)
                {
                    State = States.Startup;
                }
                break;

            case States.Startup:
                Vtol.SetActive(true);
                Tracer.LogInformation("VTOL active");
                _engineAudio.Play();
                _engineAudio.loop = true;
                _throttle = 0;
                Tracer.LogInformation("Engines on");
                State = States.StartingUp;
                break;

            case States.StartingUp:
                _throttle = Mathf.MoveTowards(_throttle, 1, 0.9f * Time.deltaTime);
                var shifted = Mathf.Lerp(0.5f, 1, _throttle);
                _pitchShiftSetting.Level = shifted;
                _pitchShiftSetting.Apply();

                if (_throttle >= 1)
                {
                    _harrierThrusterAnimation.enabled = true;
                    Tracer.LogInformation("Thrusters on");
                    _stepDelay = Delay.StartNew(3);
                    State = States.Started;
                }
                break;

            case States.Started:
                if (_stepDelay.IsTimedOut)
                {
                    State = States.Taxi;
                }
                break;

            case States.Taxi:
                if (_stepDelay.IsTimedOut)
                {
                    State = States.Takeoff;
                }
                break;

            case States.Takeoff:
                _vtolSoundFx.enabled = true;
                _altAutopilot.Engage = true;
                _exhaustRoot.SetActive(true);
                Tracer.LogInformation("ALT enabled");
                State = States.Climb;
                break;

            case States.Climb:
                break;
        }

        _stateMachine.Reset();
    }

[1] This is a nasty condition, typically seen in GUI applications, whereby during the execution of, say, CalculatePrimeNumbers_Clicked() (an event handler that takes a few minutes to run), the event handler calls Application.DoEvents() in an act of desperation to preserve GUI responsiveness. Unfortunately, Application.DoEvents() will process the message pump (remember, we are already in the middle of a processing step), and if the user happens to click the same button again then CalculatePrimeNumbers_Clicked() will be invoked again. It actually doesn’t matter what they click. This can lead to corruption of application state in exactly the same way as unsafe multithreaded code can.
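The re-entrancy described in this footnote can be reproduced without Windows Forms. The following is a rough sketch under stated assumptions: ToyPump is a hypothetical stand-in for the real message loop, its DoEvents() method only imitates what Application.DoEvents() does, and PrimeForm is an invented class that tracks how deeply its handler is nested.

```csharp
using System;
using System.Collections.Generic;

// A toy message pump standing in for the Windows Forms message loop.
public class ToyPump
{
    private readonly Queue<Action> _messages = new Queue<Action>();

    // Queues a message (for example, a button click) for later dispatch.
    public void Post(Action message) => _messages.Enqueue(message);

    // Imitates Application.DoEvents(): dispatches everything queued so far.
    public void DoEvents()
    {
        while (_messages.Count > 0)
        {
            _messages.Dequeue().Invoke();
        }
    }
}

public class PrimeForm
{
    private readonly ToyPump _pump;
    private int _depth;

    public PrimeForm(ToyPump pump)
    {
        _pump = pump;
    }

    // Deepest nesting of the handler seen so far; above 1 means it was re-entered.
    public int MaxDepth { get; private set; }

    public void CalculatePrimeNumbers_Clicked()
    {
        _depth++;
        MaxDepth = Math.Max(MaxDepth, _depth);

        // ... a slice of the long-running calculation would go here ...

        // Intended to keep the GUI responsive, but it dispatches any pending
        // click while this handler is still in the middle of its work.
        _pump.DoEvents();

        _depth--;
    }
}
```

Posting two clicks with pump.Post(form.CalculatePrimeNumbers_Clicked) and then calling pump.DoEvents() leaves form.MaxDepth at 2: the second click was handled inside the first. An unguarded StartCoroutine() call inside Update() piles up work in a comparable way.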
